I’m using Unity 5.6.4 with zCore 5.0.0 (runtime 4.0.3) and zView 5.0.0 (runtime 4.0.2).

I enabled Transparency in zView because I need transparent shaders on some objects. Everything works perfectly on the zSpace screen and in zView’s Standard mode, but not in zView’s Augmented Reality mode. If I enable the “Allow HDR” option on the MainCamera, zView displays two layers at once: one from Standard mode and one from Augmented Reality mode. If I disable “Allow HDR”, zView does not clear the frame in the webcam render area.

Please show me how to fix it.

*Note: everything worked with zCore 4.0.0 (runtime 4.0.3) and zView 4.0.0 (runtime 4.0.2), with “Allow HDR” disabled on the camera.

Update: I managed to fix it by changing some code in VirtualCameraAR and in the CompositorRGBA shader.

I’m glad to hear you found a fix for your issue. I wasn’t able to reproduce it on my end. We’re always on the lookout for fixes and improvements for future releases, so if you have a spare moment, we’d love to hear more detail about your solution!

Sorry for the late reply,

Actually, my problem is that the VR camera renders a white background behind the non-environment objects. This causes problems for transparent objects when they are dragged into the webcam area. I made a small change to VirtualCameraAR and the CompositorRGBA shader so that the camera doesn’t render the background.
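To see why a white background hurts transparent objects, here is a worked example. It assumes the materials use ordinary straight-alpha blending (`Blend SrcAlpha OneMinusSrcAlpha`, as in Unity’s Fade rendering mode) and that zView composites the webcam video in only after the scene has been rendered; neither detail is confirmed in this thread. A transparent surface with color $C_s$ and alpha $\alpha_s$ is mixed with whatever color $C_d$ is already in the render target:

$$
C_{\text{out}} = \alpha_s C_s + (1 - \alpha_s)\, C_d
$$

For a red surface $C_s = (1, 0, 0)$ at $\alpha_s = 0.5$ over a white clear color $C_d = (1, 1, 1)$, this gives $C_{\text{out}} = (0.5 + 0.5,\ 0 + 0.5,\ 0 + 0.5) = (1, 0.5, 0.5)$. The white is baked into the pixel, so the object looks pale pink instead of letting the video behind it show through.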

VirtualCameraAR.cs: use only _compositorCamera.cullingMask when rendering non-environment objects:

```csharp
private void RenderRGBA(ZView zView) {
    // =======================================

    // Render all non-environment objects including the box mask.
    _secondaryCamera.clearFlags = CameraClearFlags.Skybox;
    _secondaryCamera.backgroundColor = MASK_COLOR;
    _secondaryCamera.cullingMask = _compositorCamera.cullingMask; //<----
    _secondaryCamera.targetTexture = _nonEnvironmentRenderTexture;
    _secondaryCamera.Render();

    // =======================================
}
```

CompositorRGBA.shader: set the alpha of mainColor to 1 when maskDepth = 1:

```hlsl
float4 depthMask(v2f pixelData) : COLOR0 {
    float maskDepth = DecodeFloatRGBA(tex2D(_MaskDepthTexture, pixelData.uv));
    float4 mainColor = tex2D(_MainTex, pixelData.uv);
    float4 nonEnvironmentColor = tex2D(_NonEnvironmentTexture, pixelData.uv);

    if (maskDepth < 0.999) {
        return nonEnvironmentColor;
    } else {
        mainColor.a = 1; // <----
        return mainColor;
    }
}
```
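Written out per pixel, the modified `depthMask` selects between the two textures as shown below. The symbols are only shorthand for this sketch: $d$ is the value decoded from `_MaskDepthTexture` with `DecodeFloatRGBA`, $N$ is the `_NonEnvironmentTexture` sample, and $M$ is the `_MainTex` sample.

$$
\mathrm{out}(u) =
\begin{cases}
N(u), & d(u) < 0.999 \\
\left(M_{\mathrm{rgb}}(u),\ 1\right), & \text{otherwise}
\end{cases}
$$

The mask depth pass in `RenderRGBA` clears to `Color.white`, which `DecodeFloatRGBA` turns into a value slightly above 1. Pixels the mask does not cover should therefore take the second branch, where the main render is now forced fully opaque. Pixels covered by the mask, which presumably have a smaller encoded depth, keep the non-environment render including its alpha.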

I’ve tried this method, but I still have the problem where the screen isn’t being cleared.

Here’s what my code looks like:

VirtualCameraAR.cs: use only _compositorCamera.cullingMask when rendering non-environment objects:

```csharp
private void RenderRGBA(ZView zView)
{
    if (zView.ARModeEnvironmentLayers != 0)
    {
        // Update globals for the depth render shader.
        Shader.SetGlobalFloat("_Log2FarPlusOne", (float)Math.Log(_secondaryCamera.farClipPlane + 1, 2));

        // Perform a depth render of the mask.
        _secondaryCamera.clearFlags = CameraClearFlags.Color;
        _secondaryCamera.backgroundColor = Color.white;
        _secondaryCamera.cullingMask = (1 << zView.ARModeMaskLayer);
        _secondaryCamera.targetTexture = _maskDepthRenderTexture;
        _secondaryCamera.RenderWithShader(_depthRenderShader, string.Empty);

        // Render all non-environment objects including the box mask.
        _secondaryCamera.clearFlags = CameraClearFlags.Skybox;
        _secondaryCamera.backgroundColor = MASK_COLOR;
        _secondaryCamera.cullingMask = _compositorCamera.cullingMask; //<----
        // _secondaryCamera.cullingMask = _compositorCamera.cullingMask & ~(zView.ARModeEnvironmentLayers);
        _secondaryCamera.targetTexture = _nonEnvironmentRenderTexture;
        _secondaryCamera.Render();

        // Perform the composite render of the entire scene excluding
        // the mask.
        _compositorCamera.cullingMask = _compositorCamera.cullingMask & ~(1 << zView.ARModeMaskLayer);
        _compositorCamera.targetTexture = _finalRenderTexture;
        _compositorCamera.Render();
    }
    else
    {
        // Perform a render of the entire scene including the box mask.
        // NOTE: If no environment layers are set, we can optimize this
        // to a single pass.
        _secondaryCamera.backgroundColor = MASK_COLOR;
        _secondaryCamera.targetTexture = _finalRenderTexture;
        _secondaryCamera.Render();
    }
}
```
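The only functional difference between the active `cullingMask` line and the commented-out one in `RenderRGBA` is whether the environment layers are removed from the secondary camera’s mask. As a worked example with hypothetical values (layers 0 to 3 enabled on the compositor camera, environment on layer 2, showing only the low four bits):

$$
\begin{aligned}
\texttt{cullingMask} &= 1111_2 \\
\texttt{ARModeEnvironmentLayers} &= 0100_2 \\
\texttt{cullingMask} \mathbin{\&} \lnot\,\texttt{ARModeEnvironmentLayers} &= 1111_2 \mathbin{\&} 1011_2 = 1011_2 && \text{(commented-out line)} \\
\texttt{cullingMask} &= 1111_2 && \text{(active line)}
\end{aligned}
$$

With the change, the secondary camera therefore also draws environment objects into `_nonEnvironmentRenderTexture`.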

CompositorRGBA.shader: set the alpha of mainColor to 1 when maskDepth = 1:

```hlsl
float4 depthMask(v2f pixelData) : COLOR0
{
    float maskDepth = DecodeFloatRGBA(tex2D(_MaskDepthTexture, pixelData.uv));
    float4 mainColor = tex2D(_MainTex, pixelData.uv);
    float4 nonEnvironmentColor = tex2D(_NonEnvironmentTexture, pixelData.uv);

    if (maskDepth < 0.999) {
        return nonEnvironmentColor;
    }
    else
    {
        mainColor.a = 1; // <----
        return mainColor;
    }
}
```

Hi Michael,

Can you help me understand what you’re trying to do here?

If you’re trying to stop drawing the clear color/skybox, that might not be worthwhile. The objects in the video are going to differ positionally from the 3D-drawn objects because of the different camera angles, so you’ll see offset doubles of the video and 3D representations of the objects.

I’m really just trying to use objects with transparency in zView’s Augmented Reality mode.

Perhaps I misunderstood the problem temp user was having and the solution you gave him.

The problem: when you take a transparent object out of the screen in the Augmented Reality view, it keeps the same color as the screen’s background, which makes transparency unusable in zView’s AR mode.

Here are the materials I used on the objects:

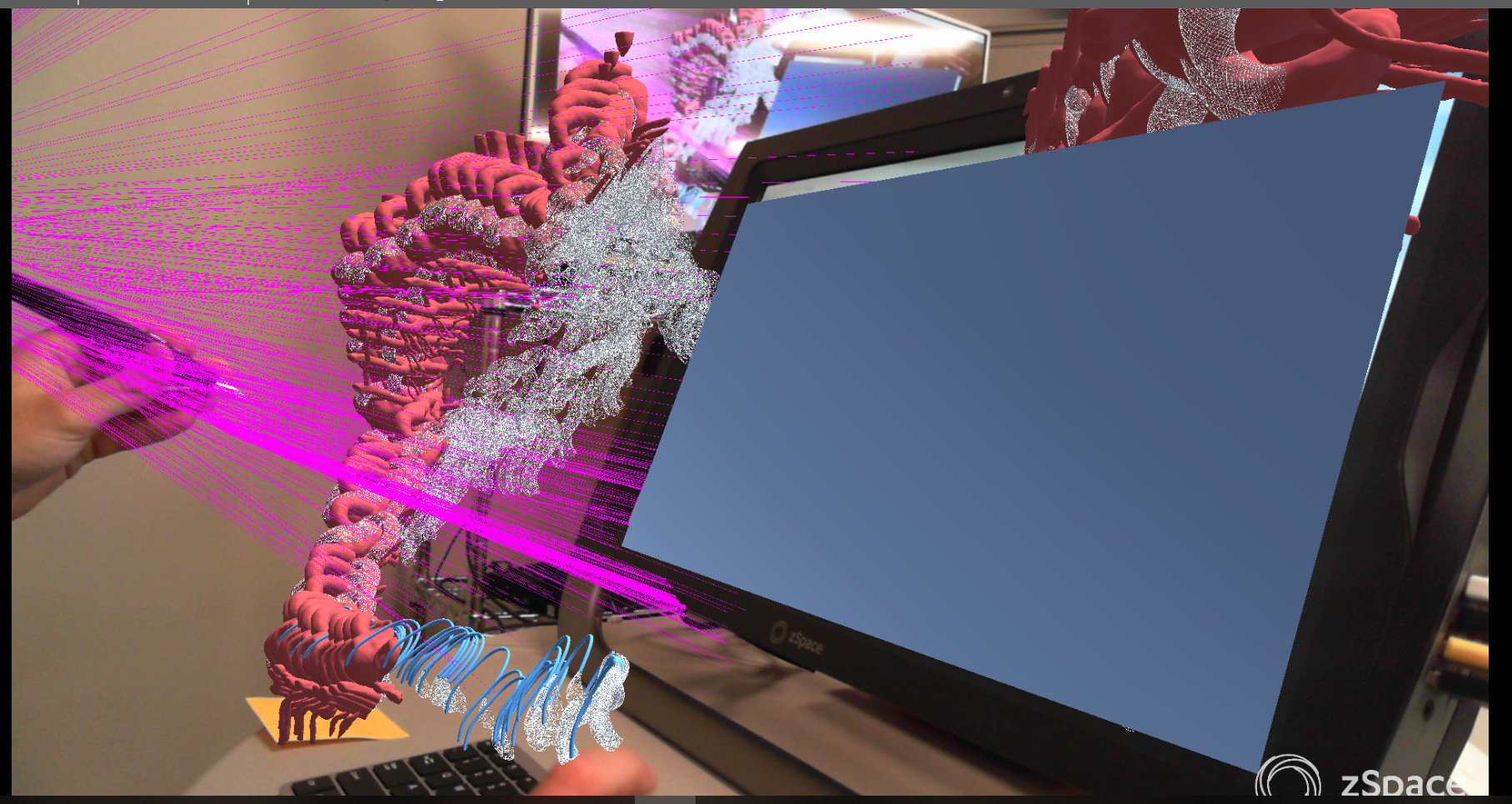

And here’s what the objects look like in AR mode once pulled out of the screen:

Hey guys, any progress on this issue?

Hey Michael,

As far as I know, that’s just how augmented mode behaves. I don’t believe we ever managed to make the webcam image draw behind transparent objects. It might be possible, but we don’t have the resources to throw at improving it at the moment.

Alex S.